Model Posterior Predictive Chart PyMC

The conventional practice of producing a posterior predictive distribution for the observed data (the data originally used for inference) is to evaluate whether your model and your … PyMC's `sample_posterior_predictive` generates forward samples for `var_names`, conditioned on the posterior samples of variables found in the trace. This method can be used to perform different kinds of model predictions. Posterior predictive checks (PPCs) are a great way to validate a model. The idea is to generate data from the model using parameters from draws from the posterior.
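The idea of generating data from posterior draws can be sketched without PyMC at all. The example below is only an illustration under stated assumptions: the observed values are made up, the noise level `sigma` is assumed known, and a closed-form normal posterior stands in for the MCMC trace that PyMC would normally provide.

```python
import random
import statistics

random.seed(42)

# Hypothetical observed data; in practice this is the data used for inference.
observed = [4.9, 5.6, 4.4, 5.1, 6.2, 5.0, 4.7, 5.8, 5.3, 4.5]
n = len(observed)
sigma = 1.0  # assumed known observation noise

# Stand-in for MCMC output: with a flat prior and known sigma, the posterior
# of the mean is Normal(mean(observed), sigma / sqrt(n)).
post_mean = statistics.fmean(observed)
post_sd = sigma / n ** 0.5
mu_draws = [random.gauss(post_mean, post_sd) for _ in range(2000)]

# One replicated data set per posterior draw.
replicated = [[random.gauss(mu, sigma) for _ in range(n)] for mu in mu_draws]

# Compare a test statistic on the replicated data with its observed value.
obs_stat = max(observed)
rep_stats = [max(rep) for rep in replicated]
p_value = sum(s >= obs_stat for s in rep_stats) / len(rep_stats)
print(f"observed max = {obs_stat:.2f}, posterior predictive p-value = {p_value:.3f}")
```

A p-value very close to 0 or 1 would suggest the model struggles to reproduce that feature of the data. The choice of test statistic (here the maximum) is arbitrary and should reflect what matters for the application.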

This blog post illustrated how PyMC's `sample_posterior_predictive` function can make use of learned parameters to predict variables in novel contexts. The stochastic node `y_pred` below enables me to generate the posterior predictive distribution: `alpha = pm.Gamma('alpha', alpha=.1, beta=.1)`, `mu = pm.Gamma('mu', alpha=.1, …`

You want $\mathbb{E}[f(x)]$, but you are computing $f(\mathbb{E}[x])$. If you take the mean of the posterior and then optimize, you will get the wrong answer due to Jensen's inequality.
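A small numerical check makes the gap between the two quantities concrete. This is a sketch with assumptions: the "posterior draws" are simulated from a normal distribution rather than taken from a real trace, and `f` is an arbitrary convex function chosen for illustration.

```python
import math
import random
import statistics

random.seed(0)

# Hypothetical posterior draws of a parameter x (stand-in for a real trace).
draws = [random.gauss(1.0, 0.8) for _ in range(10_000)]


def f(x):
    """Any nonlinear function of the parameter; exp is convex."""
    return math.exp(x)


# What you want: average f over the posterior draws.
expected_f = statistics.fmean(f(x) for x in draws)

# What you get if you plug in the posterior mean first.
f_of_mean = f(statistics.fmean(draws))

print(f"E[f(x)] = {expected_f:.3f}, f(E[x]) = {f_of_mean:.3f}")
```

For a convex `f` the first number is always at least as large as the second, so decisions based on `f(E[x])` are biased. Applying `f` to every draw and averaging afterwards avoids the problem.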

To compute the probability that A wins the next game, we can use `sample_posterior_predictive` to generate predictions.
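The logic behind such a prediction can be sketched in plain Python. This is not the PyMC workflow itself: a conjugate Beta posterior replaces MCMC sampling, and the win/loss record and the uniform prior are made-up assumptions for illustration.

```python
import random

random.seed(1)

# Hypothetical record: A won 7 of the 10 games played so far.
wins, losses = 7, 3

# Posterior for A's win probability under a uniform Beta(1, 1) prior.
p_draws = [random.betavariate(1 + wins, 1 + losses) for _ in range(20_000)]

# Posterior predictive: simulate one future game per posterior draw.
next_game = [random.random() < p for p in p_draws]
prob_a_wins = sum(next_game) / len(next_game)
print(f"P(A wins next game) is roughly {prob_a_wins:.2f}")
```

Each simulated game uses a different plausible win probability, so the final estimate averages over parameter uncertainty instead of relying on a single point estimate.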

Hi, I'm new to using PyMC and I am struggling to do simple stuff like getting the output posterior predictive distribution for a specific y_i given a specific input feature.
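One common way to approach this question is to register the input as a data container, fit the model, then swap in the new input before calling `sample_posterior_predictive`. The sketch below is hedged: the linear model, the priors, and the names `predict_for_new_input`, `intercept`, `slope`, and `noise` are hypothetical, and it assumes a recent PyMC 5 release in which `pm.Data` containers can be updated with `pm.set_data`.

```python
def predict_for_new_input(x_obs, y_obs, x_new, seed=0):
    """Posterior predictive draws of y at x_new under a simple linear model."""
    import numpy as np
    import pymc as pm  # imported lazily so the sketch loads without PyMC installed

    with pm.Model() as model:
        # Data container so the input can be replaced after fitting.
        x = pm.Data("x", np.asarray(x_obs, dtype=float))
        intercept = pm.Normal("intercept", mu=0.0, sigma=10.0)
        slope = pm.Normal("slope", mu=0.0, sigma=10.0)
        noise = pm.HalfNormal("noise", sigma=5.0)
        # shape=x.shape lets the likelihood resize when x is swapped out later.
        pm.Normal(
            "y",
            mu=intercept + slope * x,
            sigma=noise,
            observed=np.asarray(y_obs, dtype=float),
            shape=x.shape,
        )
        idata = pm.sample(random_seed=seed, progressbar=False)

    with model:
        # Replace the training inputs with the input(s) of interest.
        pm.set_data({"x": np.atleast_1d(np.asarray(x_new, dtype=float))})
        pred = pm.sample_posterior_predictive(
            idata,
            var_names=["y"],
            predictions=True,
            random_seed=seed,
            progressbar=False,
        )

    # Collapse chains and draws: one row per posterior draw, one column per new input.
    return pred.predictions["y"].values.reshape(-1, np.size(x_new))
```

Calling `predict_for_new_input(x_obs, y_obs, 2.5)` with your own arrays would return a column of draws for y at that input. A histogram of that column approximates the posterior predictive distribution for the specific point.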

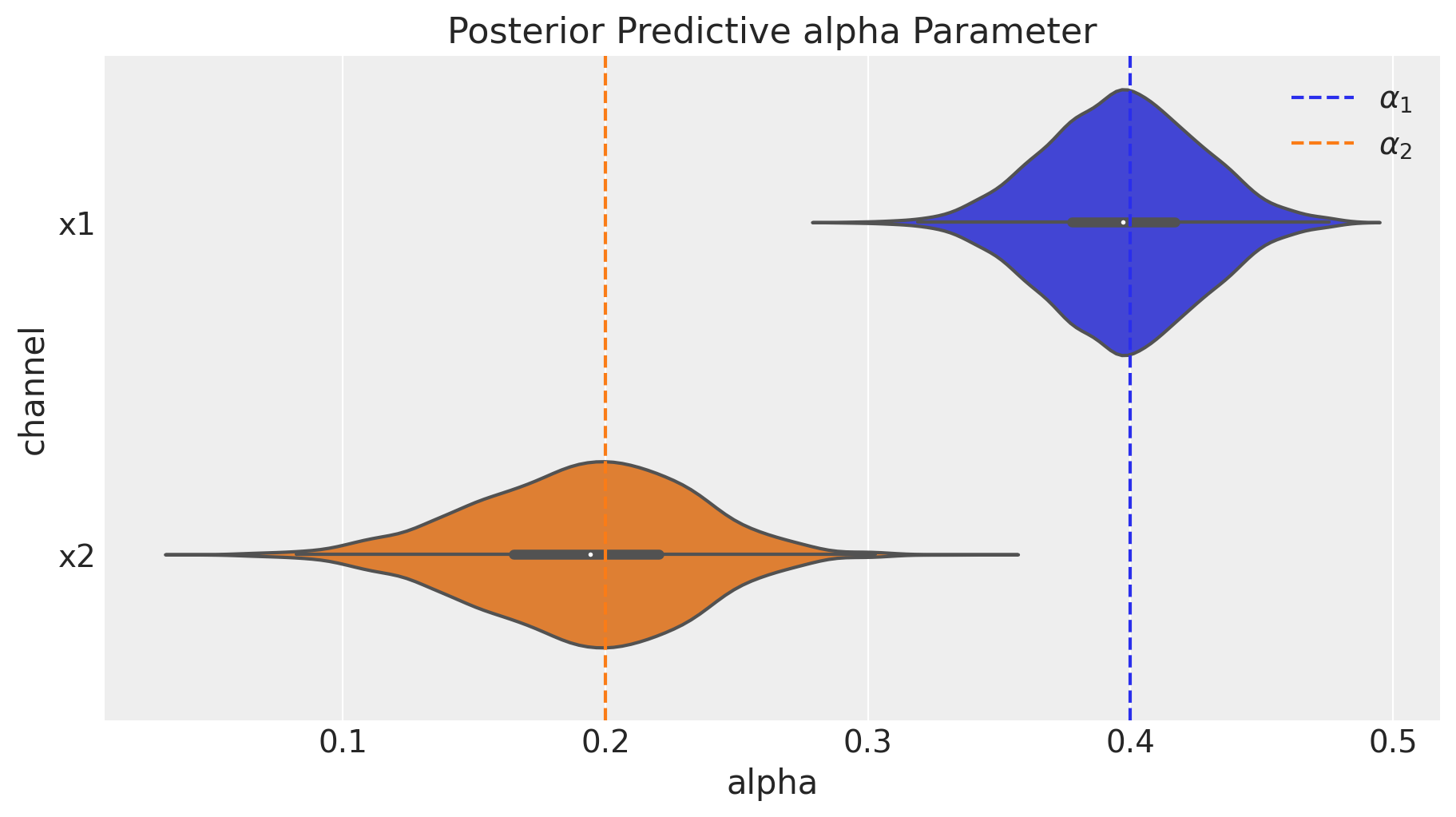

There is an interpolated distribution that allows you to use samples from arbitrary distributions as a prior. I would suggest checking out this notebook for a) some general tips on prior/posterior predictive checking workflow, b) some custom plots that could be used to … The prediction for each is an array, so I'll flatten it into a sequence.
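The interpolated distribution mentioned above is available in PyMC as `pm.Interpolated`. The sketch below shows one way it might be used to turn posterior samples into a prior. The helper name `prior_from_samples`, the grid size, and the kernel density estimate from SciPy are assumptions made for illustration, not something the text above prescribes.

```python
def prior_from_samples(name, samples, n_points=100):
    """Build a pm.Interpolated prior from samples; call inside a model context."""
    import numpy as np
    import pymc as pm  # lazy imports so the sketch loads without PyMC or SciPy
    from scipy import stats

    samples = np.asarray(samples, dtype=float)
    lo, hi = samples.min(), samples.max()
    width = hi - lo

    # Evaluate a kernel density estimate of the samples on a regular grid.
    x = np.linspace(lo, hi, n_points)
    pdf = stats.gaussian_kde(samples)(x)

    # Extend the grid to points with zero density far outside the sampled range;
    # linear interpolation then assigns small but non-zero density to values
    # just outside the draws.
    x = np.concatenate([[x[0] - 3 * width], x, [x[-1] + 3 * width]])
    pdf = np.concatenate([[0.0], pdf, [0.0]])
    return pm.Interpolated(name, x, pdf)
```

Inside a `with pm.Model():` block, a call such as `prior_from_samples("alpha", alpha_samples)`, where `alpha_samples` is a flattened array of earlier posterior draws, would then take the place of a parametric prior.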

The way I see it, `plot_ppc()` is useful for visualizing the distributional nature of the posterior predictive (i.e., the countless blue densities), but if you want to plot the mean posterior …
